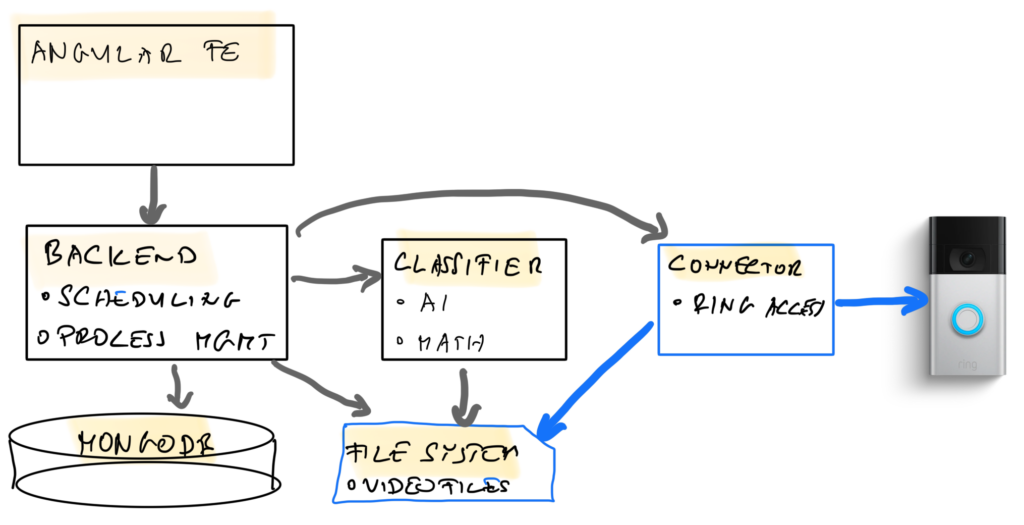

In my previous posts, I evaluated how to enhance the Ring doorbell with face recognition features. Now, after running the system on my local servers for a few years, it is time to move the solution into the cloud. I chose Google Cloud (GCP), and in the next posts I will show the steps needed to move this solution to GCP.

To save costs and cater for the low-load environment, we will use Cloud Functions for most of the services. We will also need to replace the local file system with Cloud Storage, and secure the application with a combination of Secret Manager, Identity-Aware Proxy and Google Auth in Angular.

To ease the setup, I plan to write Terraform scripts for most of the infrastructure and run Terraform from GitHub Actions.

Ring Connector as Cloud function

The connector is tasked with accessing the Ring REST API and downloading the video files to the local file system. Both of these connections, depicted in blue below, will need adjustment for the cloud. We will also deploy the connector itself as a Cloud Function, to take advantage of its infrequent invocation (once per door visitor).

In the subsequent chapters, the following changes will be introduced to the connector:

- Secret Manager to load and update RING auth info

- Custom Service Account to provide the identity of the Cloud Function

- Deploy as Cloud Function, and run as Cloud Function emulation locally

- Authentication via ADC with User Credentials locally, and Service Account on Cloud Function

- Storing the data to Cloud Storage

Let's take these changes one by one.

While we used a local file for the Ring authentication in the dockerized application, this approach does not work on GCP. Instead, we will store all credentials in Secret Manager (GSM). The Ring access token is read from GSM in `access_secret_version`. To access Secret Manager from the connector's Python function, we rely on Application Default Credentials (ADC): if ADC is configured correctly, `SecretManagerServiceClient` uses it automatically.

However, the access token needs a regular refresh, which requires write access to the GSM secret (see `add_secret_version`).

```python
import json
import logging

from decouple import config  # assumption: config() comes from python-decouple
from google.cloud import secretmanager


def access_secret_version(project_id, secret_id, version_id="latest"):
    """Accesses the payload of the specified secret version."""
    name = f"projects/{project_id}/secrets/{secret_id}/versions/{version_id}"
    logging.debug(f"Accessing the GSM at {name}")
    # The client picks up Application Default Credentials automatically
    client = secretmanager.SecretManagerServiceClient()
    response = client.access_secret_version(request={"name": name})
    payload = response.payload.data.decode("UTF-8")
    return payload


def load_ring_auth_json():
    logging.info("Loading the ring auth file")
    project_id = config("GCP_PROJECT")
    secret_id = config("RING_AUTH_SECRET_ID")
    secret_string = access_secret_version(project_id, secret_id)
    logging.debug(f"Secret projects/{project_id}/secrets/{secret_id} accessed successfully")
    config_data = json.loads(secret_string)
    return config_data


def add_secret_version(project_id, secret_id, new_secret_data):
    """Adds a new version to the specified secret with the given data."""
    client = secretmanager.SecretManagerServiceClient()
    parent = client.secret_path(project_id, secret_id)
    # Convert data to bytes
    payload = new_secret_data.encode("UTF-8")
    # Add the secret version
    response = client.add_secret_version(request={"parent": parent, "payload": {"data": payload}})
    return response


def update_ring_auth_json(token):
    # logging.warn is deprecated; logging.warning is the supported spelling
    logging.warning("UPDATING the ring auth file")
    project_id = config("GCP_PROJECT")
    secret_id = config("RING_AUTH_SECRET_ID")
    # New secret data
    new_secret_data = json.dumps(token)
    # Add a new version
    response = add_secret_version(project_id, secret_id, new_secret_data)
    print(f"Added new secret version: {response.name}")
```
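Secret Manager keeps every write as a new version, and the `latest` alias resolves to the most recently added one, which is why the update path only ever adds versions. The sketch below mimics that behaviour with an in-memory stand-in, so the load/update round trip can be reasoned about (or unit-tested) without touching GCP. `InMemorySecretStore` and the token fields are hypothetical; they are not part of the connector or of the Google client library.

```python
import json


class InMemorySecretStore:
    """Hypothetical stand-in for Secret Manager: append-only versions per secret."""

    def __init__(self):
        self._versions = {}  # secret_id -> list of payload bytes

    def add_secret_version(self, secret_id, data):
        """Append a new version and return its 1-based version number."""
        versions = self._versions.setdefault(secret_id, [])
        versions.append(data.encode("UTF-8"))
        return len(versions)

    def access_secret_version(self, secret_id, version_id="latest"):
        """Return the payload of a version; 'latest' means the newest one."""
        versions = self._versions[secret_id]
        index = len(versions) if version_id == "latest" else int(version_id)
        return versions[index - 1].decode("UTF-8")


store = InMemorySecretStore()

# Initial token, as Terraform would seed it (the field name is made up).
store.add_secret_version("RING_AUTH_SECRET", json.dumps({"access_token": "old"}))

# A refresh adds a second version instead of overwriting the first.
new_version = store.add_secret_version(
    "RING_AUTH_SECRET", json.dumps({"access_token": "new"})
)

latest = json.loads(store.access_secret_version("RING_AUTH_SECRET"))
first = json.loads(store.access_secret_version("RING_AUTH_SECRET", "1"))
print(new_version, latest["access_token"], first["access_token"])
```

Because older versions stay around, a frequently refreshed token will accumulate versions over time; whether to clean them up is a separate decision not covered here.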

The other major change is replacing the locally mounted file system with Cloud Storage (GCS). Again, ADC takes care of access to the storage bucket (see `storage.Client()`). We will store both the mp4 and the JSON description in the /video/ folder of the bucket.

```python
import io
import json

from decouple import config  # assumption: config() comes from python-decouple
from google.cloud import storage

BUCKET_NAME = config("BUCKET_NAME")


def save_mp4_to_gcs(content, filename):
    # If content is a requests Response object, extract the content
    if hasattr(content, 'content'):
        content = content.content
    # Use a BytesIO wrapper for the content and upload it to GCS
    blob(filename).upload_from_file(io.BytesIO(content), content_type='video/mp4')


def save_json_to_gcs(data, filename):
    # Convert the Python dictionary to JSON string
    if isinstance(data, dict):
        data = json.dumps(data)
    # Upload the JSON string to GCS
    blob(filename).upload_from_string(data, content_type='application/json')


def blob(filename):
    # Initialize a client (uses Application Default Credentials)
    storage_client = storage.Client()
    # Get the bucket
    bucket = storage_client.bucket(BUCKET_NAME)
    # Create a blob (GCS file) in the bucket
    return bucket.blob(filename)
```
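Cloud Storage has a flat namespace, so a "folder" is simply a prefix in the object name passed as `filename`. Judging by the sample response shown later in this post, the event name looks like the event's creation time formatted as `YYYYMMDD-HHMMSS`. The sketch below derives both object names under that assumption. The helper names and the `.json` naming are hypothetical, and since the text mentions `/video/` while the sample response shows `videos/`, the prefix is kept as a parameter.

```python
from datetime import datetime


def event_name(created_at):
    """Format a timestamp like '2023-12-13T18:17:21.000000Z' as '20231213-181721'."""
    parsed = datetime.strptime(created_at, "%Y-%m-%dT%H:%M:%S.%fZ")
    return parsed.strftime("%Y%m%d-%H%M%S")


def object_names(created_at, prefix="videos"):
    """Return the (mp4, json) object names for one event (naming is an assumption)."""
    name = event_name(created_at)
    return f"{prefix}/{name}.mp4", f"{prefix}/{name}.json"


video_name, json_name = object_names("2023-12-13T18:17:21.000000Z")
print(video_name)
print(json_name)
```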

While it is possible and relatively simple to set up these objects via the cloud console, we will opt for an infrastructure-as-code solution with Terraform. The complete code is available on GitHub.

We are going to:

- activate the required APIs on the GCP project

- create the service account to power the cloud function

- create the Cloud Storage bucket to hold the mp4 videos from Ring

- create the Secret Manager secret to access the Ring API

```hcl
resource "google_project_service" "services" {
  for_each = toset([
    "artifactregistry.googleapis.com",
    "bigquery.googleapis.com",
    "bigquerymigration.googleapis.com",
    "bigquerystorage.googleapis.com",
    "cloudapis.googleapis.com",
    "cloudbuild.googleapis.com",
    "cloudfunctions.googleapis.com",
    "cloudtrace.googleapis.com",
    "containerregistry.googleapis.com",
    "datastore.googleapis.com",
    "logging.googleapis.com",
    "monitoring.googleapis.com",
    "pubsub.googleapis.com",
    "run.googleapis.com",
    "secretmanager.googleapis.com",
    "servicemanagement.googleapis.com",
    "serviceusage.googleapis.com",
    "sql-component.googleapis.com",
    "storage-api.googleapis.com",
    "storage-component.googleapis.com",
    "storage.googleapis.com",
  ])

  project = var.project_id
  service = each.value
}
```

Due to the fine-grained API concept, there is a rather large list of APIs to activate. The service account itself is easy to create:

```hcl
resource "google_service_account" "connector_sa" {
  account_id   = "connector-sa"
  display_name = "Connector Service Account"
}
```

Now we can create the bucket and give the service account permission to access it, so that it can store the downloaded files.

```hcl
resource "google_storage_bucket" "ringface_data_bucket" {
  name          = var.data_bucket_name
  location      = "europe-west3"
  storage_class = "STANDARD"
}

resource "google_storage_bucket_iam_binding" "bucket_iam_binding" {
  bucket = google_storage_bucket.ringface_data_bucket.id
  role   = "roles/storage.objectUser"
  members = [
    "serviceAccount:${google_service_account.connector_sa.email}",
  ]
}
```

The secret will hold an initial access and refresh token for the Ring API. We will grant the service account both read and write access: the latter is used to keep the token up to date.

```hcl
resource "google_secret_manager_secret" "ring_auth_secret" {
  secret_id = "RING_AUTH_SECRET"
  replication {
    auto {}
  }
}

resource "google_secret_manager_secret_version" "ring_auth_secret_version" {
  secret      = google_secret_manager_secret.ring_auth_secret.id
  secret_data = file("${path.module}/oauth-authorization.json")
}

resource "google_secret_manager_secret_iam_binding" "secret_accessor" {
  secret_id = google_secret_manager_secret.ring_auth_secret.id
  role      = "roles/secretmanager.secretAccessor"
  members = [
    "serviceAccount:${google_service_account.connector_sa.email}",
  ]
}

resource "google_secret_manager_secret_iam_binding" "secret_version_adder" {
  secret_id = google_secret_manager_secret.ring_auth_secret.id
  role      = "roles/secretmanager.secretVersionAdder"
  members = [
    "serviceAccount:${google_service_account.connector_sa.email}",
  ]
}
```

After applying these changes with a local Terraform run, we can publish our first cloud function. This is also done locally, with a gcloud command:

```shell
gcloud functions deploy download-ring-video \
  --gen2 \
  --project $PROJECT \
  --service-account connector-sa@$PROJECT.iam.gserviceaccount.com \
  --runtime python310 \
  --source=. \
  --entry-point=download \
  --trigger-http \
  --region=europe-west3 \
  --allow-unauthenticated \
  --env-vars-file .env.yaml

# cat .env.yaml
# RING_AUTH_SECRET_ID: RING_AUTH_SECRET
# GCP_PROJECT: "123456578"
# BUCKET_NAME: something_you_defined_in_terraform.tfvars
```

At this stage, the connector is deployed to GCP and the function can be tested. The function is not protected yet, so you can invoke it with the following command. Expect it to download all of today's events at your door into the Cloud Storage bucket.

```shell
curl https://europe-west3-$PROJECT.cloudfunctions.net/download-ring-video

# [{"answered":false,"createdAt":"2023-12-13T18:17:21.000000Z","date":"20231213","duration":30.0,"eventName":"20231213-181721","kind":"ding","ringId":"7312145060820735839","status":"UNPROCESSED","videoFileName":"videos/20231213-181721.mp4"},{"answered":false,"createdAt":"2023-12-13T15:02:38.000000Z","date":"20231213","duration":30.0,"eventName":"20231213-150238","kind":"ding","ringId":"7312094882717816671","status":"UNPROCESSED","videoFileName":"videos/20231213-150238.mp4"},{"answered":false,"createdAt":"2023-12-13T09:46:31.000000Z","date":"20231213","duration":32.0,"eventName":"20231213-094631","kind":"ding","ringId":"7312013420073113439","status":"UNPROCESSED","videoFileName":"videos/20231213-094631.mp4"}]
```
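The response is a JSON array with one object per event, so it is easy to post-process. As a small sketch, the snippet below parses a shortened copy of the sample output above and lists the videos that still have the status `UNPROCESSED`. The helper name `unprocessed_videos` is hypothetical, and the sample keeps only two events and a subset of the fields.

```python
import json

# Shortened copy of the sample response above (two events, fewer fields).
SAMPLE_RESPONSE = """
[
  {"eventName": "20231213-181721", "kind": "ding", "duration": 30.0,
   "status": "UNPROCESSED", "videoFileName": "videos/20231213-181721.mp4"},
  {"eventName": "20231213-094631", "kind": "ding", "duration": 32.0,
   "status": "UNPROCESSED", "videoFileName": "videos/20231213-094631.mp4"}
]
"""


def unprocessed_videos(response_text):
    """Return the video object names of all events not yet processed."""
    events = json.loads(response_text)
    return [e["videoFileName"] for e in events if e["status"] == "UNPROCESSED"]


videos = unprocessed_videos(SAMPLE_RESPONSE)
total_seconds = sum(e["duration"] for e in json.loads(SAMPLE_RESPONSE))
print(videos, total_seconds)
```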

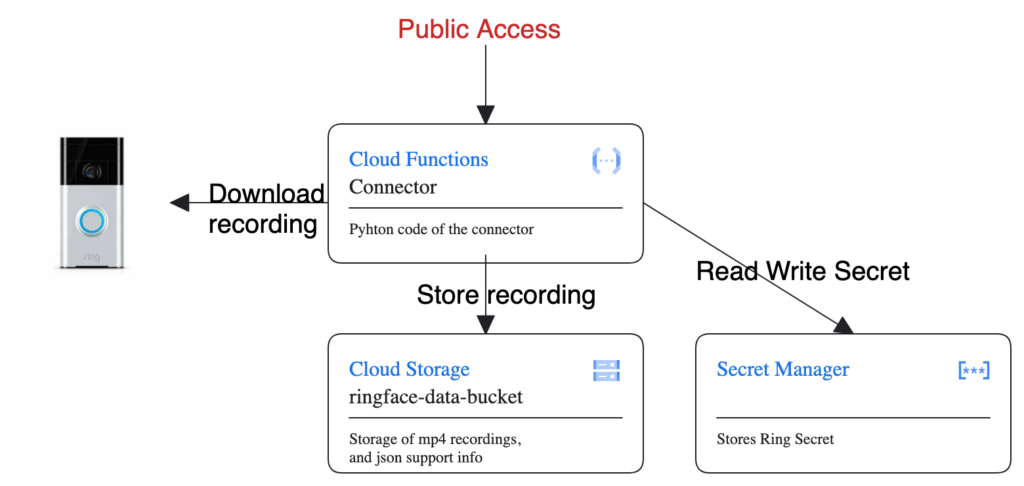

At this point we have a working GCP infrastructure in place, which can be simplified as follows:

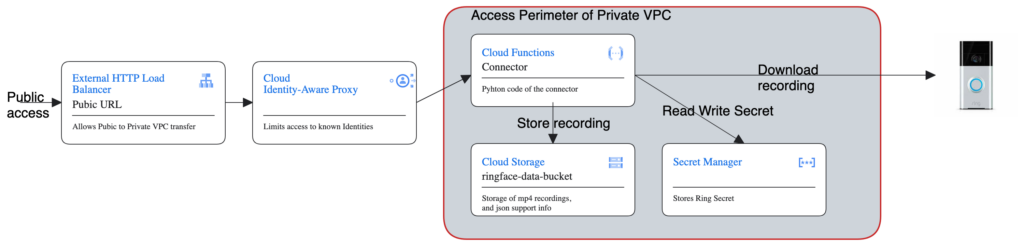

Obviously, public access to this private service is not the best choice, and we will address this with an Identity-Aware Proxy (IAP). IAP is a great way to keep the auth-related code in a separate component instead of adding it to the mission-oriented connector code. We will modify the above diagram as follows.

While there is full Terraform support for setting up the load balancing, the IAP is not covered in Terraform, and manual setup is needed. Let's take the load balancing first. There is no single Load Balancer object in GCP. Instead, it is composed of a chain of Network Endpoint Group, Backend Service, URL Map, HTTPS Proxy and Forwarding Rule components.

```hcl
resource "google_compute_region_network_endpoint_group" "ringface_neg" {
  name                  = "${var.project_id}-neg"
  network_endpoint_type = "SERVERLESS"
  region                = "europe-west3"
  cloud_run {
    service = var.cloud_run_connector
  }
}

resource "google_compute_backend_service" "ringface_backend_service" {
  name                  = "${var.project_id}-backend-service"
  protocol              = "HTTPS"
  load_balancing_scheme = "EXTERNAL"
  timeout_sec           = 30
  backend {
    group = google_compute_region_network_endpoint_group.ringface_neg.id
  }
}

resource "google_compute_url_map" "ringface_url_map" {
  name            = "${var.project_id}-url-map"
  default_service = google_compute_backend_service.ringface_backend_service.id
}

resource "google_compute_managed_ssl_certificate" "ringface_ssl_certificate" {
  name = "${var.project_id}-ssl-certificate"
  managed {
    domains = [var.dns-to-global-ip]
  }
}

resource "google_compute_target_https_proxy" "ringface_target_proxy" {
  name             = "${var.project_id}-target-proxy"
  url_map          = google_compute_url_map.ringface_url_map.id
  quic_override    = "NONE"
  ssl_certificates = [google_compute_managed_ssl_certificate.ringface_ssl_certificate.self_link]
}

resource "google_compute_global_forwarding_rule" "ringface_forwarding_rule" {
  name                  = "${var.project_id}-forwarding-rule"
  target                = google_compute_target_https_proxy.ringface_target_proxy.id
  load_balancing_scheme = "EXTERNAL"
  port_range            = "443"
  # ip_address = var.ip_address_name
  ip_address  = google_compute_global_address.ringface_global_ip.address
  ip_protocol = "TCP"
}
```

IAP needs to be activated manually on the backend service, and the DNS entry that points to the IP of the forwarding rule must also be created manually.
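To see how the pieces of the load balancer hang together, the references in the Terraform above can be followed from the public entry point down to the function. The sketch below does that with a plain dictionary. It only models which component points at which, and the short labels are informal names rather than Terraform identifiers.

```python
# Each component maps to the component it forwards to, mirroring the
# target / url_map / default_service / backend.group / cloud_run references.
POINTS_TO = {
    "forwarding rule": "target HTTPS proxy",
    "target HTTPS proxy": "URL map",
    "URL map": "backend service",
    "backend service": "serverless NEG",
    "serverless NEG": "Cloud Run service of the function",
}


def request_path(start):
    """Follow the references from `start` until a component has no target."""
    path = [start]
    while path[-1] in POINTS_TO:
        path.append(POINTS_TO[path[-1]])
    return path


chain = request_path("forwarding rule")
print(" -> ".join(chain))
```

Read this way, the backend service sits in the middle of the chain, which is where IAP is switched on.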

The code for these changes is fully available on GitHub (Terraform, Connector). Next, we will look into deploying the Classifier component on GCP.